SPARCs in Space: The Cobham UT700 Leon3FT Processor

In the 1990s the ESA began a project to develop its own open-source, easily usable processor for space applications. Before this, the ESA had mainly used MIL-STD-1750A processors, either American-made ones or direct copies thereof, such as the Dynex MAS281, a clone of the McDonnell Douglas MDC281. The ESA explored many different architectures, including the Motorola MC88K RISC processor, the MIPS RISC processor, the AMD 29K RISC processor, the SPARC, and, somewhat oddly, even the National Semiconductor NS32K series (which at the time were fairly powerful and saw a fair amount of use in embedded applications). The SPARC came out of this as the winner.

At the time, the SPARC was a widely used processor being developed by multiple companies. It was defined as an architecture, and each company could implement it however it saw fit, in whatever process technology it chose. This is very much how the 1750A architecture had been designed as well. Considering this, the only two really viable architectures that would not (at that time) have been a sole-source item were the MIPS and the SPARC. Both were used and made by many companies, but SPARC it was.

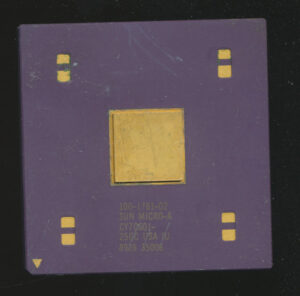

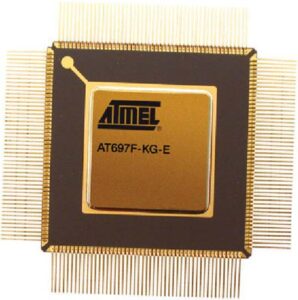

The first implementation was the ERC32, released in 1995: an early SPARC V7 three-chip implementation typically made on a 0.8u process. These were decent, but took three chips, were limited to 20MHz by memory interface limitations, and were not particularly scalable. The ERC32 did fly in space: it was used on the ISS as one of the main control computers, as well as on 10 other missions, including ESA's ATV resupply vehicles for the ISS. By 1998 the ERC32 had been shrunk to 0.6u, allowing it to be integrated onto a single chip (the Atmel TSC695). This became the standard ESA processor and was also used by other nations and agencies, including China, Israel, India and even NASA.

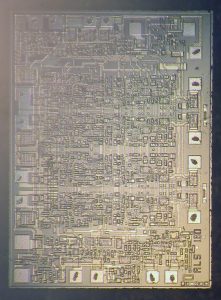

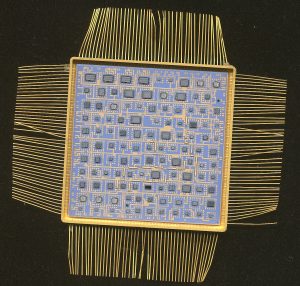

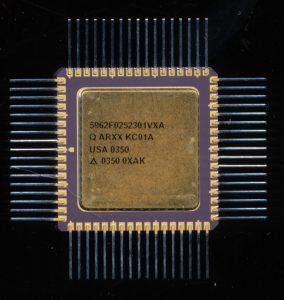

By the year 2000 the SPARC V7 architecture was rather long in the tooth, having originally been designed back in the 1980s. The decision was made to upgrade to SPARC V8. SPARC V8 added integer multiply/divide instructions and expanded the floating point from 80-bit to 128-bit. SPARC V8 also became the basis for IEEE 1754-1994, the IEEE standard for a 32-bit microprocessor architecture. This was important because it gave software a very clear definition as well: ESA wanted a processor whose behavior, and therefore its software support, was very well known and very well defined. The SPARC V8 implementation became the LEON (for Lion) processor. This first LEON used a 5-stage pipeline (Fetch, Decode, Execute, Memory, Write) and was made on a 0.35u process, delivering around 50MIPS at 0.5W. It used around 100,000 gates on a 30mm² die and was a fully fault-tolerant design (unlike the ERC32). It was rated to handle 300Krad of ionizing radiation without upset.

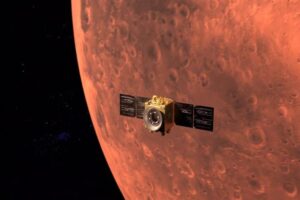

LEON2 was a fairly similar design. It moved the MUL/DIV instructions into hardware (instead of emulating them, as on LEON1) and reduced the feature size to 0.18u. It also added many on-chip peripherals, such as a PC133 SDRAM controller (with error detection and correction) and an AMBA bus. It drew around 0.6W at 100MIPS, though some implementations reached up to 120MIPS at 0.3W. LEON2 saw use on many missions, including the camera controller for the Venus Express mission and the BepiColombo mission to Mercury. LEON2 was designed as a single-function processor, but in the real world it was often used as a SoC (System on a Chip).
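The software fallback mentioned above for LEON1 can be pictured as a shift-and-add loop. The Python sketch below is only a generic illustration, not the actual LEON1 or SPARC library routine (a real one would be SPARC assembly, and code for pre-V8 SPARC typically builds it from the V7 `MULScc` multiply-step instruction). The helper names `umul32_soft` and `split_hi_lo` are hypothetical; the split mirrors how the SPARC V8 `UMUL` instruction returns the low 32 bits of the product in the destination register and the high 32 bits in the Y register.

```python
MASK32 = 0xFFFFFFFF


def umul32_soft(a: int, b: int) -> int:
    """Unsigned 32x32 -> 64-bit multiply using only shifts and adds.

    Hypothetical helper: illustrates the shift-and-add idea behind a
    software multiply, not any specific LEON or SPARC runtime routine.
    """
    a &= MASK32
    b &= MASK32
    product = 0
    for bit in range(32):
        # For every set bit in the multiplier, add the multiplicand
        # shifted left by that bit's position.
        if (b >> bit) & 1:
            product += a << bit
    return product  # always fits in 64 bits for 32-bit inputs


def split_hi_lo(product64: int) -> tuple[int, int]:
    """Split a 64-bit product the way SPARC V8 UMUL does: (Y, rd)."""
    return product64 >> 32, product64 & MASK32


if __name__ == "__main__":
    hi, lo = split_hi_lo(umul32_soft(0xFFFFFFFF, 0xFFFFFFFF))
    print(hex(hi), hex(lo))  # 0xfffffffe 0x1
```

A hardware multiplier avoids running a loop like this in software for every multiply, which is the point of moving MUL/DIV into hardware.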

This led to the development of the LEON3 in 2004. It was originally made on a slightly LARGER process of 0.20u, and ran at around 150MIPS at 0.4W. Its biggest upgrades were moving from a 5-stage pipeline to a 7-stage pipeline (Fetch, Decode, Register Access, Execute, Memory, Exception, Write) and adding support for multiprocessing. Recognizing how the LEON processors were actually being used (as SoCs rather than as standalone processors), the LEON3 added a large array of peripherals, including SpaceWire, MIL-STD-1553 interfaces, DDR RAM controllers, USB controllers, a Gigabit Ethernet MAC and much more. All of this, which originally had to be added on to previous systems, was now on chip.

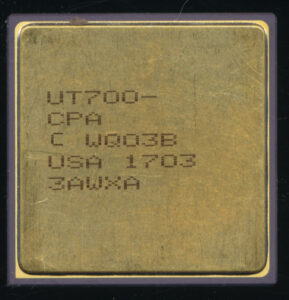

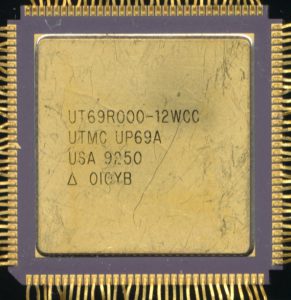

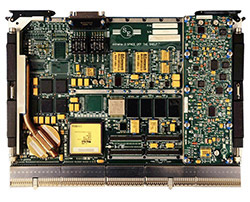

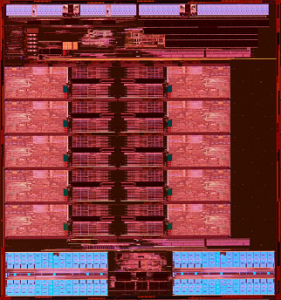

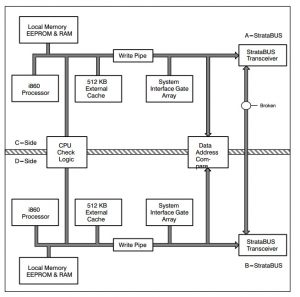

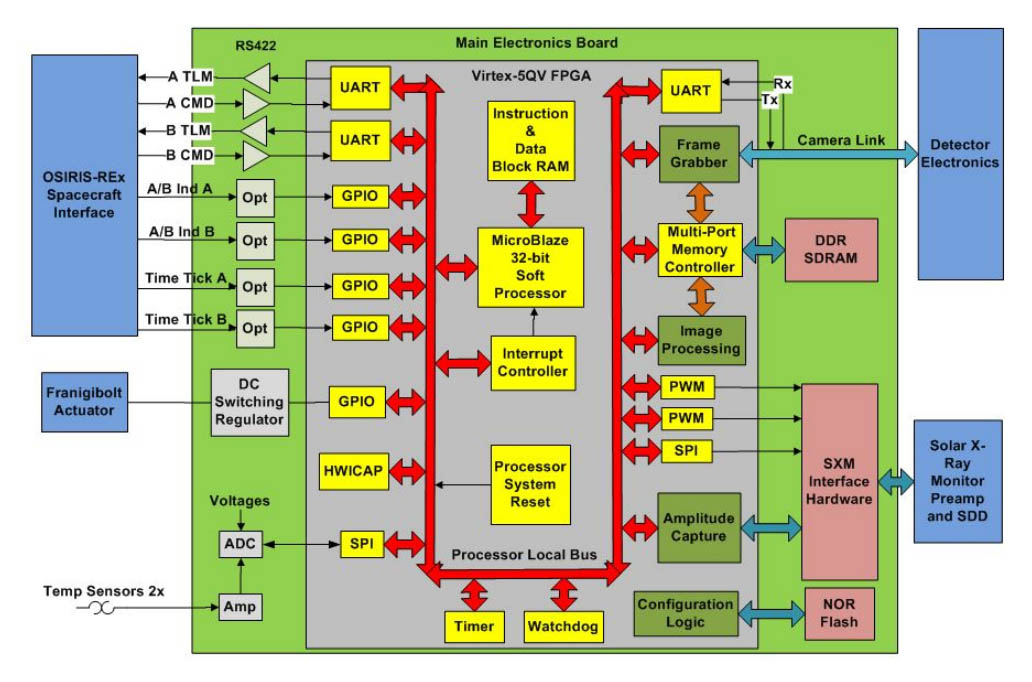

The entire design was good for 400MHz on a 0.13u process and used around 25,000 gates. Like the LEONs before it, the LEON3 was designed as a synthesizable core. You could implement the entire core in your own ASIC or FPGA, or buy an FPGA off the shelf already programmed with it (Aeroflex offers this option). You could also buy ready-made processors implementing it, much like any other CPU. Cobham (now known as CAES, Cobham Advanced Electronic Solutions) offers the UT700. The UT700 is a 166MHz processor implementing the full LEON3FT design. The ‘FT’ stands for Fault Tolerant: it adds a lot of error detection and correction features on top of the base LEON3 design. Every bit of on-chip RAM, from the registers to the caches, has error detection and correction. The UT700 includes 16K of instruction and data cache on chip, as well as all the usual memory controllers and communication interfaces of the LEON3. It runs at 1.2-1.8V and dissipates up to 4W at maximum performance.
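To give a feel for what on-chip error detection and correction does, here is a generic single-error-correcting, double-error-detecting (SEC-DED) extended Hamming(8,4) code in Python. It is an illustration under stated assumptions, not the UT700's scheme: the specific codes the LEON3FT applies to its registers and caches are not described here, real memories protect much wider words, and `encode`/`decode` are hypothetical names.

```python
# Generic extended Hamming(8,4) SEC-DED code.
# Illustration only: NOT the specific EDAC code used in the LEON3FT/UT700.


def encode(nibble: int) -> list[int]:
    """Encode 4 data bits into an 8-bit codeword (list of bits).

    Positions 1..7 form a Hamming(7,4) code with parity bits at positions
    1, 2 and 4; position 0 holds overall parity for double-error detection.
    """
    code = [0] * 8
    # Data bits go in the non-power-of-two positions 3, 5, 6, 7.
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    # Parity bit p covers every other position whose index has bit p set.
    for p in (1, 2, 4):
        for pos in range(1, 8):
            if pos & p and pos != p:
                code[p] ^= code[pos]
    code[0] = sum(code[1:]) % 2  # make total parity even
    return code


def decode(code: list[int]) -> tuple:
    """Return (nibble, status); status is 'ok', 'corrected' or 'double'."""
    code = list(code)  # work on a copy
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos  # XOR of set positions is 0 for a valid word
    overall = sum(code) % 2  # 1 means an odd number of bits flipped
    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1:
        # Single error: the syndrome points at the bad bit
        # (syndrome 0 means the overall parity bit itself flipped).
        code[syndrome] ^= 1
        status = "corrected"
    else:
        # Nonzero syndrome but even parity: two bits flipped, uncorrectable.
        return None, "double"
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, status


if __name__ == "__main__":
    word = encode(0b1011)
    word[6] ^= 1  # simulate a single-event upset flipping one stored bit
    print(decode(word))  # (11, 'corrected')
```

A single flipped bit, such as one caused by a particle strike, is corrected transparently; two flipped bits in the same word are detected but cannot be corrected, so the system has to handle that case some other way, for example by reporting an error or reloading the data.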

The LEON3FT powers the European Galileo navigation satellites and many other spacecraft, including the French Spot-6 Earth observation satellite. It also powers each of the Iridium-NEXT communications satellites, which began launching in 2017.

Posted in:

CPU of the Day