The End is Near (of the year) – A Look Back at Y2K

Think back 19 years: the year is 1999, and in just a few days the world is apparently coming to an end because programmers in the 1960s and ’70s decided to save precious memory by storing the year as two digits instead of four. Or perhaps they just assumed that in 30-40 years we really wouldn’t be using the same systems. Either way, the world (and by world we mean mainly the media) was prepared to go dark as everything technology-driven ground to a halt when the clocks struck midnight. Kids pondered whether this would mean an extended holiday break, while parents wondered whether they would still have a job, or money in their computer-controlled checking account.
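For anyone who never saw the bug up close, here is a minimal sketch of what two-digit years do to simple date arithmetic. It is only an illustration: the function names and the 1985 account are made up for this example, and real legacy systems (typically COBOL and friends, not Python) stored and handled dates in many different ways.

```python
def years_elapsed_two_digit(start_yy: int, end_yy: int) -> int:
    """Subtract years stored as two digits (hypothetical legacy-style record)."""
    return end_yy - start_yy


def years_elapsed_four_digit(start_year: int, end_year: int) -> int:
    """Subtract years stored with all four digits."""
    return end_year - start_year


# A hypothetical account opened in 1985, checked in 1999 and again in 2000.
age_in_1999 = years_elapsed_two_digit(85, 99)       # 99 - 85 = 14, looks fine
age_in_2000 = years_elapsed_two_digit(85, 0)        # 0 - 85 = -85, the rollover bug
correct_age = years_elapsed_four_digit(1985, 2000)  # 2000 - 1985 = 15

print(age_in_1999, age_in_2000, correct_age)
```

With two digits, the year 2000 is stored as 00, so anything computed across the rollover (ages, interest periods, expiry dates) can suddenly go negative or land a century off.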

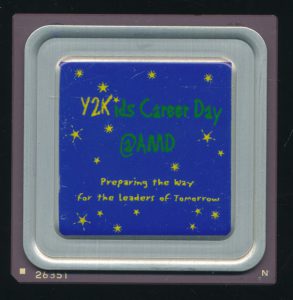

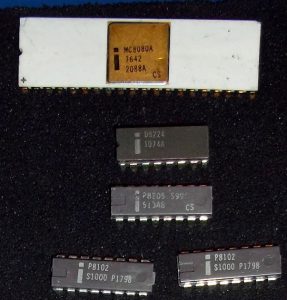

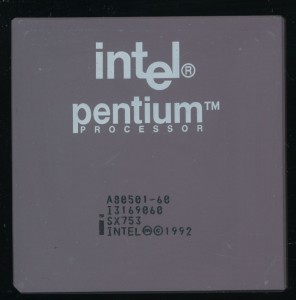

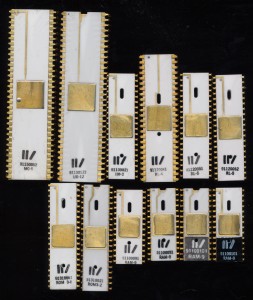

Thankfully (though perhaps, looking back, that is becoming murky to some) it was a complete non-event, and life and technology continued at a record pace. And who would want to miss it? The GHz war between AMD and Intel was neck and neck at the turn of the millennium, with AMD set to win it by a few days. This was the age of the Pentium III, the Athlon and the K6-2. Technology was glamorous, and some of the downsides we see today were relegated to sci-fi movies. AMD and other companies held job fairs to recruit new talent, and also hosted Career Days for younger kids to see what went on in the exciting tech industry.

This specially painted AMD K6-2 CPU was likely handed out during such an event, probably either in Austin, TX (where AMD had a large fab) or Santa Clara, CA. It’s an NTK-made package with AMD package # 26351, the standard from 1998-2000 and used for almost all late K6-2 CPUs. The child who received this, probably a middle schooler at the time, would now be around 30. Who knows how such an event affected them, but it would be neat if they ended up working at AMD (or GlobalFoundries), or at the very least using an AMD-powered computer.
