Making MultiCore: A Slice of Sandy

Intel Sandy Bridge-EP 8-core dies with 6 cores enabled. Note the TOP and BOTTOM markings.

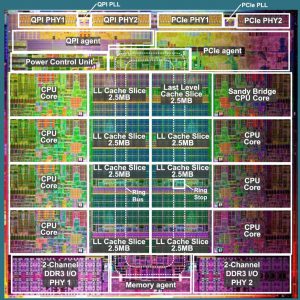

Recently a pair of interesting Intel Engineering Samples came to The CPU Shack. They are in an LGA2011 package and dated week 33 of 2010. The part number is CM8062103008562, which makes them rather early Sandy Bridge-EP samples. The original Sandy Bridge was demoed in 2009 and released in early 2011, so Intel was already making the next version before the original had even reached the market. The ‘EP’ was finally released in late 2011, over a year after these samples were made. Sandy Bridge-EP brought some enhancements to the architecture, including support for 8-core processors (double the original's 4). The layout was also rather different, with the cores and peripherals arranged so that a bidirectional communications ring could handle all on-die communication.

Sandy Bridge-EP 8-core die layout. Note the ring around the inside that provides communication between the peripherals on the top and bottom and the 8 cores. (image originally from pc.watch.impress.co.jp)

Sandy Bridge-EP supports 2, 4, 6 and 8 cores, but Intel only produced two die versions: one with 4 cores and one with 8 cores. A 4-core die could be made to work as a dual core or quad core, and an 8-core die could conceivably be used for any of the core counts. This greatly simplifies manufacturing: the fewer physical versions of a wafer you are making, the better optimized the process can be. If a bug or erratum is found, only two mask sets need to be updated, rather than one for every core count/cache combination. This does, however, raise an interesting question: what happens when you disable cores?
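To make the "one die, many core counts" idea concrete, here is a minimal sketch of die binning under a deliberately simplified rule: a die ships as the highest supported core count (2, 4, 6 or 8, per the text above) that does not exceed its number of working cores, with any extra cores fused off. The function name, the die labels and the binning rule itself are hypothetical illustrations, not Intel's actual process, which would also have to account for cache, clock speed and power.

```python
from typing import Optional, Set

# Core counts Sandy Bridge-EP supports, as stated in the article.
SUPPORTED_CORE_COUNTS = (2, 4, 6, 8)

# The two physical die versions (hypothetical labels, real core counts).
DIE_CORES = {"4-core die": 4, "8-core die": 8}


def sellable_core_count(die: str, defective: Set[int]) -> Optional[int]:
    """Return the largest supported core count this die can ship as, or None.

    Hypothetical rule: defective cores are fused off, and extra healthy cores
    may also be disabled to land on a supported core count.
    """
    total = DIE_CORES[die]
    if any(c < 0 or c >= total for c in defective):
        raise ValueError("defective core index out of range")
    working = total - len(defective)
    fits = [n for n in SUPPORTED_CORE_COUNTS if n <= working]
    return max(fits) if fits else None


if __name__ == "__main__":
    print(sellable_core_count("8-core die", {3}))     # one bad core -> 6
    print(sellable_core_count("4-core die", {0, 2}))  # two bad cores -> 2
```

Under this rule a single defect on an 8-core die costs two cores rather than the whole die, which is the economic point of building only two die versions.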

That is the purpose of the above samples: testing the effects of disabling a pair of cores on an 8-core die. Both samples are 6-core processors, but each has a different pair of cores disabled. One has the ‘TOP’ six cores active, and the other has the ‘BOTTOM’ six cores active. This may seem redundant, but here the physical position of the cores really matters. Disabling two cores changes the timing on the ring bus around the die, which may affect performance, so it had to be tested. Timing may have been adjusted slightly to account for the differences, and Intel may have found that disabling two cores on the bottom resulted in different timings than disabling the two on the top.
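Why would the position of the disabled pair matter at all? A toy model of a bidirectional ring can illustrate the idea. Everything about the layout here is an assumption, not Intel's floorplan: ten ring stops, a "top agent" and a "bottom agent" standing in for the peripherals at the top and bottom of the die, and two columns of four cores between them. Disabled cores are assumed to leave their ring stops in place, and a "hop" is simply one step along the shorter way around the ring. It measures average distance, not real timing.

```python
# Toy model of a bidirectional ring bus. The stop order below is a
# HYPOTHETICAL simplification, not Intel's actual Sandy Bridge-EP layout.
RING = ["TOP_AGENT", "C0", "C1", "C2", "C3",
        "BOTTOM_AGENT", "C4", "C5", "C6", "C7"]

ALL_CORES = [s for s in RING if s.startswith("C")]

# Hypothetical 6-core configurations: which pair of cores is fused off.
CONFIGS = {
    "all 8 cores": set(),
    "TOP six active": {"C3", "C4"},     # bottom pair disabled
    "BOTTOM six active": {"C0", "C7"},  # top pair disabled
}


def hops(a: str, b: str) -> int:
    """Shortest hop count between two stops; traffic may go either way."""
    d = abs(RING.index(a) - RING.index(b))
    return min(d, len(RING) - d)


def avg_hops(active_cores, agent: str) -> float:
    """Mean hop distance from each active core to the given agent stop."""
    return sum(hops(c, agent) for c in active_cores) / len(active_cores)


def report():
    """Map each config name to (avg hops to top agent, avg hops to bottom)."""
    results = {}
    for name, disabled in CONFIGS.items():
        active = [c for c in ALL_CORES if c not in disabled]
        results[name] = (avg_hops(active, "TOP_AGENT"),
                         avg_hops(active, "BOTTOM_AGENT"))
    return results


if __name__ == "__main__":
    for name, (to_top, to_bottom) in report().items():
        print(f"{name:18s} avg hops to top agent: {to_top:.2f}, "
              f"to bottom agent: {to_bottom:.2f}")
```

In this model the full 8-core die is symmetric (2.5 hops on average to either agent), while the ‘TOP’ configuration sits closer to the top agent (2.0 hops versus 3.0) and the ‘BOTTOM’ configuration is its mirror image. Real ring behavior, with per-stop latency, cache slice placement and arbitration, is far more involved, which is why each configuration would have to be tested on actual silicon.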

Ideally, Intel wants the ability to disable ANY combination of cores and cache on the die. A defective core or cache segment should not result in the entire die being wasted, so a lot of testing was done to make the design as adaptable as possible. It's rare that we get to see a part from this testing, but we all get to enjoy its results.

Tags:

Intel, Multicore, Sandy Bridge

Posted in:

CPU of the Day